Mirror of https://github.com/YunoHost-Apps/keeweb_ynh.git, synced 2024-09-03 19:26:33 +02:00

Refresh app

- Install from source
- Config
- 2.4

parent e69432b507
commit f2ecb1b715

17 changed files with 153 additions and 1622 deletions

44

LICENSE

@@ -616,46 +616,4 @@ an absolute waiver of all civil liability in connection with the
 Program, unless a warranty or assumption of liability accompanies a
 copy of the Program in return for a fee.

 END OF TERMS AND CONDITIONS
-
-How to Apply These Terms to Your New Programs
-
-If you develop a new program, and you want it to be of the greatest
-possible use to the public, the best way to achieve this is to make it
-free software which everyone can redistribute and change under these terms.
-
-To do so, attach the following notices to the program. It is safest
-to attach them to the start of each source file to most effectively
-state the exclusion of warranty; and each file should have at least
-the "copyright" line and a pointer to where the full notice is found.
-
-<one line to give the program's name and a brief idea of what it does.>
-Copyright (C) <year> <name of author>
-
-This program is free software: you can redistribute it and/or modify
-it under the terms of the GNU Affero General Public License as published by
-the Free Software Foundation, either version 3 of the License, or
-(at your option) any later version.
-
-This program is distributed in the hope that it will be useful,
-but WITHOUT ANY WARRANTY; without even the implied warranty of
-MERCHANTABILITY or FITNESS FOR A PARTICULAR PURPOSE. See the
-GNU Affero General Public License for more details.
-
-You should have received a copy of the GNU Affero General Public License
-along with this program. If not, see <http://www.gnu.org/licenses/>.
-
-Also add information on how to contact you by electronic and paper mail.
-
-If your software can interact with users remotely through a computer
-network, you should also make sure that it provides a way for users to
-get its source. For example, if your program is a web application, its
-interface could display a "Source" link that leads users to an archive
-of the code. There are many ways you could offer source, and different
-solutions will be better for different programs; see section 13 for the
-specific requirements.
-
-You should also get your employer (if you work as a programmer) or school,
-if any, to sign a "copyright disclaimer" for the program, if necessary.
-For more information on this, and how to apply and follow the GNU AGPL, see
-<http://www.gnu.org/licenses/>.

@ -1,18 +1,14 @@

|

||||||

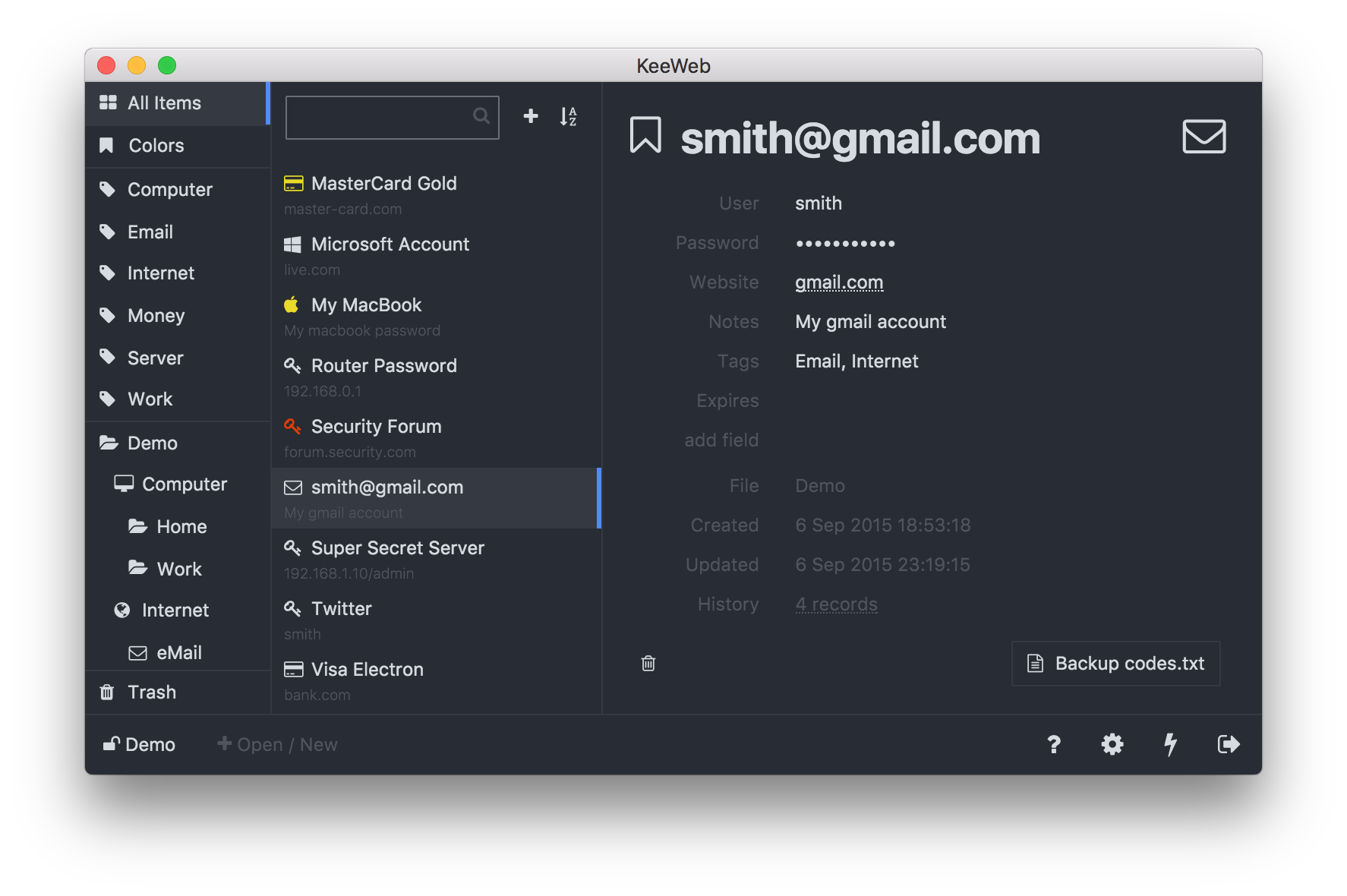

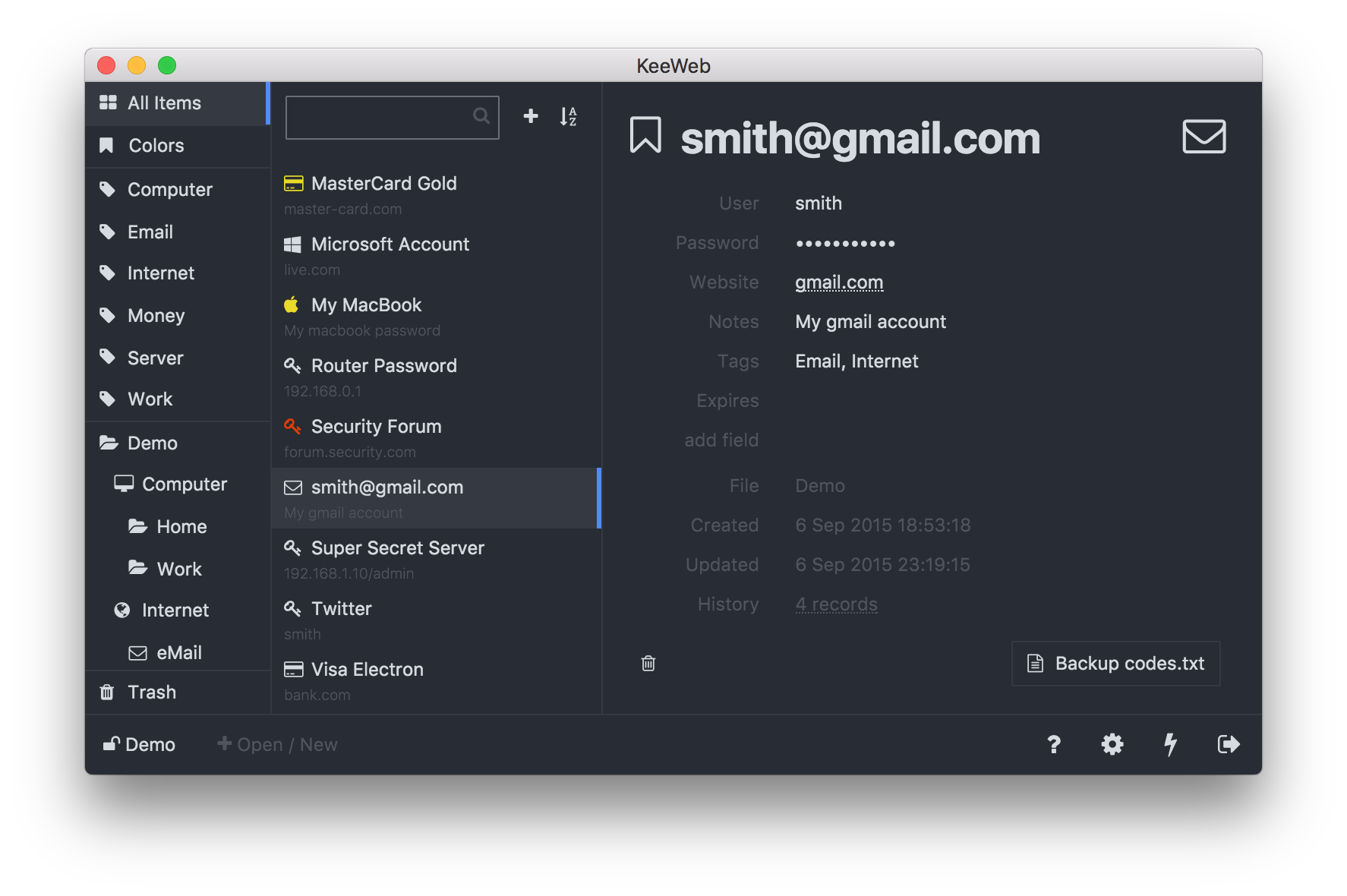

# Keeweb for YunoHost #

|

# Keeweb for YunoHost #

|

||||||

|

|

||||||

Web client for reading and editing Keepass files locally. It can also sync with Owncloud, Google Drive and Dropbox.

|

Web client for reading and editing Keepass files locally. It can also sync with WebDAV (Owncloud, Nextcloud...), Dropbox, Google Drive, OneDrive...

|

||||||

|

|

||||||

|

|

||||||

**Current version: 1.1.4**

|

|

||||||

|

|

||||||

## Usage with OwnCloud ##

|

## Usage with OwnCloud ##

|

||||||

1. Open your file through webdav using https://linktoowncloud/remote.php/webdav and your username and password

|

1. Open your file through webdav using https://linktoowncloud/remote.php/webdav and your username and password

|

||||||

|

|

||||||

## Usage for Dropbox sync ##

|

## Usage for Dropbox sync ##

|

||||||

1. [create](https://www.dropbox.com/developers/apps/create) a Dropbox app

|

1. [create](https://www.dropbox.com/developers/apps/create) a Dropbox app

|

||||||

2. find your app key (in Dropbox App page, go to Settings/App key)

|

2. find your app key (in Dropbox App page, go to Settings/App key)

|

||||||

3. Enter the app key during install

|

|

||||||

|

|

||||||

|

|

||||||

|

|

||||||

**More information on the documentation page:**

|

**More information on the documentation page:**

|

||||||

|

|

|

||||||

42

conf/config.json

Normal file

@@ -0,0 +1,42 @@
+{
+    "settings": {
+        "theme": "fb",
+        "locale": "en",
+        "expandGroups": true,
+        "listViewWidth": null,
+        "menuViewWidth": null,
+        "tagsViewHeight": null,
+        "autoUpdate": "install",
+        "autoSave": true,
+        "rememberKeyFiles": false,
+        "idleMinutes": 15,
+        "tableView": false,
+        "colorfulIcons": false,
+        "lockOnCopy": false,
+        "skipOpenLocalWarn": false,
+        "hideEmptyFields": false,
+        "skipHttpsWarning": true,
+        "demoOpened": false,
+        "fontSize": 0,
+        "tableViewColumns": null,
+        "generatorPresets": null,
+
+        "canOpen": true,
+        "canOpenDemo": true,
+        "canOpenSettings": true,
+        "canCreate": true,
+        "canImportXml": true,
+
+        "dropbox": true,
+        "dropboxFolder": null,
+        "dropboxAppKey": null,
+
+        "webdav": true,
+
+        "gdrive": true,
+        "gdriveClientId": null,
+
+        "onedrive": true,
+        "onedriveClientId": null
+    }
+}

@@ -1,26 +1,25 @@
 {
-    "package_format": 1,
     "name": "Keeweb",
     "id": "keeweb",
+    "packaging_format": 1,
     "description": {
         "en": "Password manager compatible with KeePass.",
         "fr": "Gestionnaire de mots de passe compatible avec KeePass."
     },
     "url": "https://keeweb.info/",
-    "license": "AGPL-3",
-    "version": "1.1.4",
+    "license": "free",
     "maintainer": {
         "name": "Scith",
         "url": "https://github.com/scith"
     },
-    "multi_instance": "false",
+    "requirements": {
+        "yunohost": ">> 2.4.0"
+    },
+    "multi_instance": true,
     "services": [
         "nginx",
         "php5-fpm"
     ],
-    "requirements": {
-        "yunohost": ">= 2.2"
-    },
     "arguments": {
         "install" : [
             {
@@ -39,17 +38,17 @@
                     "en": "Choose a path for Keeweb",
                     "fr": "Choisissez un chemin pour Keeweb"
                 },
-                "example": "/passwords",
-                "default": "/passwords"
+                "example": "/keeweb",
+                "default": "/keeweb"
             },
             {
                 "name": "is_public",
+                "type": "boolean",
                 "ask": {
                     "en": "Is it a public application?",
                     "fr": "Est-ce une application publique ?"
                 },
-                "choices": ["Yes", "No"],
-                "default": "No"
+                "default": true
             }
         ]
     }
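
Judging from the install script further down in this commit, each manifest argument reaches the script as an environment variable: `domain`, `path` and `is_public` appear there as `YNH_APP_ARG_DOMAIN`, `YNH_APP_ARG_PATH` and `YNH_APP_ARG_IS_PUBLIC`. A minimal sketch of that naming pattern follows; `arg_env_name` is a hypothetical helper written for illustration and is not part of YunoHost or of this package.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: derive the variable name the scripts in this commit
# read for a given manifest argument (prefix plus upper-cased name).
arg_env_name() {
    printf 'YNH_APP_ARG_%s\n' "$(printf '%s' "$1" | tr '[:lower:]' '[:upper:]')"
}

for name in domain path is_public; do
    arg_env_name "$name"
done
```

The loop only prints names; it does not read or set any YunoHost state.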

@@ -1,18 +1,16 @@
 #!/bin/bash

-# causes the shell to exit if any subcommand or pipeline returns a non-zero status
-set -e
+# Exit on command errors and treat unset variables as an error
+set -eu

-app=keeweb
+app=$YNH_APP_INSTANCE_NAME

-# The parameter $1 is the backup directory location which will be compressed afterward
-backup_dir=$1/apps/$app
-sudo mkdir -p $backup_dir
+# Source YunoHost helpers
+source /usr/share/yunohost/helpers

 # Backup sources & data
-sudo cp -a /var/www/$app/. $backup_dir/sources
+ynh_backup "/var/www/${app}" "sources"

-# Copy Nginx and YunoHost parameters to make the script "standalone"
-sudo cp -a /etc/yunohost/apps/$app/. $backup_dir/yunohost
-domain=$(sudo yunohost app setting $app domain)
-sudo cp -a /etc/nginx/conf.d/$domain.d/$app.conf $backup_dir/nginx.conf
+# Copy NGINX configuration
+domain=$(ynh_app_setting_get "$app" domain)
+ynh_backup "/etc/nginx/conf.d/${domain}.d/${app}.conf" "nginx.conf"

@@ -1,42 +1,51 @@
 #!/bin/bash

-# causes the shell to exit if any subcommand or pipeline returns a non-zero status
-set -e
+# Exit on command errors and treat unset variables as an error
+set -eu

-app=keeweb
+app=$YNH_APP_INSTANCE_NAME
+
+# Source YunoHost helpers
+source /usr/share/yunohost/helpers

 # Retrieve arguments
-domain=$1
-path=$2
-is_public=$3
+domain=$YNH_APP_ARG_DOMAIN
+path=$YNH_APP_ARG_PATH
+is_public=$YNH_APP_ARG_IS_PUBLIC
+
+# Remove trailing "/" for next commands
+path=${path%/}

 # Save app settings
-sudo yunohost app setting $app is_public -v "$is_public"
+ynh_app_setting_set "$app" is_public "$is_public"

 # Check domain/path availability
-sudo yunohost app checkurl $domain$path -a $app \
-    || (echo "Path not available: $domain$path" && exit 1)
+sudo yunohost app checkurl "${domain}${path}" -a "$app" \
+    || ynh_die "Path not available: ${domain}${path}"
+
+# Download source
+sudo wget https://raw.githubusercontent.com/keeweb/keeweb/gh-pages/index.html
+sudo wget https://raw.githubusercontent.com/keeweb/keeweb/gh-pages/manifest.appcache
+sudo sed -i.bak 's/(no-config)/config.json/g' index.html

 # Copy source files
-final_path=/var/www/$app
-sudo mkdir -p $final_path
-sudo cp -a ../sources/. $final_path
+src_path=/var/www/$app
+sudo mkdir -p $src_path
+sudo cp -a index.html manifest.appcache ../conf/config.json $src_path

 # Set permissions to app files
-sudo chown -R root:root $final_path
+sudo chown -R root: $src_path

 # Modify Nginx configuration file and copy it to Nginx conf directory
 sed -i "s@YNH_WWW_PATH@$path@g" ../conf/nginx.conf
-sed -i "s@YNH_WWW_ALIAS@$final_path/@g" ../conf/nginx.conf
+sed -i "s@YNH_WWW_ALIAS@$src_path/@g" ../conf/nginx.conf
 sudo cp ../conf/nginx.conf /etc/nginx/conf.d/$domain.d/$app.conf

 # If app is public, add url to SSOWat conf as skipped_uris
-if [ "$is_public" = "Yes" ];
-then
+if [[ $is_public -eq 1 ]]; then
     # unprotected_uris allows SSO credentials to be passed anyway.
-    sudo yunohost app setting $app unprotected_uris -v "/"
+    ynh_app_setting_set "$app" unprotected_uris "/"
 fi

 # Restart services
 sudo service nginx reload
-sudo yunohost app ssowatconf
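
Two small text operations carry most of the new install logic: `path=${path%/}` strips one trailing slash, and the `sed` call points the downloaded `index.html` at `config.json`. The sketch below tries both on throwaway data. The helper name `strip_trailing_slash` and the sample `<meta>` line are assumptions made for illustration; the real `index.html` is not shown in this commit.

```shell
#!/bin/sh
set -eu

# Hypothetical helper, not part of the package: drop one trailing "/".
strip_trailing_slash() {
    printf '%s\n' "${1%/}"
}

strip_trailing_slash "/keeweb/"
strip_trailing_slash "/keeweb"

# Work on a temporary copy; this <meta> line is an assumed stand-in
# for the placeholder inside the downloaded index.html.
workdir=$(mktemp -d)
printf '%s\n' '<meta name="kw-config" content="(no-config)">' > "$workdir/index.html"

# Same substitution as the install script. In sed's basic regex syntax the
# parentheses are literal; -i.bak keeps the original as index.html.bak.
sed -i.bak 's/(no-config)/config.json/g' "$workdir/index.html"
cat "$workdir/index.html"
```

Note that a path of `/` becomes an empty string after stripping, which is what lets `${domain}${path}` be concatenated without a doubled slash.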

@@ -1,18 +1,18 @@
 #!/bin/bash
-app=keeweb
+
+app=$YNH_APP_INSTANCE_NAME
+
+# Source YunoHost helpers
+source /usr/share/yunohost/helpers

 # Retrieve arguments
-domain=$(sudo yunohost app setting $app domain)
-path=$(sudo yunohost app setting $app path)
-dropbox=$(sudo yunohost app setting $app dropbox)
-is_public=$(sudo yunohost app setting $app is_public)
+domain=$(ynh_app_setting_get "$app" domain)

 # Remove sources
 sudo rm -rf /var/www/$app

-# Remove configuration files
+# Remove nginx configuration file
 sudo rm -f /etc/nginx/conf.d/$domain.d/$app.conf

 # Restart services
 sudo service nginx reload
-sudo yunohost app ssowatconf

@@ -1,23 +1,30 @@
 #!/bin/bash

-# causes the shell to exit if any subcommand or pipeline returns a non-zero status
-set -e
+# Exit on command errors and treat unset variables as an error
+set -eu

-app=keeweb
+app=$YNH_APP_INSTANCE_NAME

-# The parameter $1 is the uncompressed restore directory location
-backup_dir=$1/apps/$app
+# Source YunoHost helpers
+source /usr/share/yunohost/helpers
+
+# Retrieve old app settings
+domain=$(ynh_app_setting_get "$app" domain)
+path=$(ynh_app_setting_get "$app" path)
+
+# Check domain/path availability
+sudo yunohost app checkurl "${domain}${path}" -a "$app" \
+    || ynh_die "Path not available: ${domain}${path}"

 # Restore sources & data
-sudo cp -a $backup_dir/sources/. /var/www/$app
+src_path="/var/www/${app}"
+sudo cp -a ./sources "$src_path"

 # Restore permissions to app files
-sudo chown -R root:root $final_path
+sudo chown -R root: "$src_path"

-# Restore Nginx and YunoHost parameters
-sudo cp -a $backup_dir/yunohost/. /etc/yunohost/apps/$app
-domain=$(sudo yunohost app setting $app domain)
-sudo cp -a $backup_dir/nginx.conf /etc/nginx/conf.d/$domain.d/$app.conf
+# Restore NGINX configuration
+sudo cp -a ./nginx.conf "/etc/nginx/conf.d/${domain}.d/${app}.conf"

 # Restart webserver
 sudo service nginx reload
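
The restore script changes the copy source from `$backup_dir/sources/.` to `./sources`. With `cp -a` those two forms can land files in different places depending on whether the destination directory already exists. Whether `/var/www/$app` exists at restore time is not shown in this commit, so the following is only a sketch of the `cp` behaviour on temporary directories with made-up names.

```shell
#!/bin/sh
set -eu

tmp=$(mktemp -d)
mkdir -p "$tmp/sources"
echo "placeholder" > "$tmp/sources/index.html"

# Destination absent: the copied directory itself becomes the destination.
cp -a "$tmp/sources" "$tmp/fresh"

# Destination present: the directory is copied inside it as a subdirectory.
mkdir "$tmp/existing"
cp -a "$tmp/sources" "$tmp/existing"

# A trailing "/." copies the directory contents into the destination.
mkdir "$tmp/contents"
cp -a "$tmp/sources/." "$tmp/contents"

# List where index.html ended up in each case.
find "$tmp" -name index.html | sort
```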

@@ -1,40 +1,55 @@
 #!/bin/bash

-# causes the shell to exit if any subcommand or pipeline returns a non-zero status
-set -e
+# Exit on command errors and treat unset variables as an error
+set -eu

-app=keeweb
+app=$YNH_APP_INSTANCE_NAME
+
+# Source YunoHost helpers
+source /usr/share/yunohost/helpers

 # Retrieve arguments
-domain=$(sudo yunohost app setting $app domain)
-path=$(sudo yunohost app setting $app path)
-is_public=$(sudo yunohost app setting $app is_public)
+domain=$(ynh_app_setting_get "$app" domain)
+path=$(ynh_app_setting_get "$app" path)
+is_public=$(ynh_app_setting_get "$app" is_public)

 # Remove trailing "/" for next commands
 path=${path%/}

+# Check domain/path availability
+sudo yunohost app checkurl "${domain}${path}" -a "$app" \
+    || ynh_die "Path not available: ${domain}${path}"
+
+# Download source
+sudo wget https://raw.githubusercontent.com/keeweb/keeweb/gh-pages/index.html
+sudo wget https://raw.githubusercontent.com/keeweb/keeweb/gh-pages/manifest.appcache
+sudo sed -i.bak 's/(no-config)/config.json/g' index.html
+
 # Copy source files
-final_path=/var/www/$app
-sudo mkdir -p $final_path
-sudo cp -a ../sources/. $final_path
+src_path=/var/www/$app
+sudo mkdir -p $src_path
+sudo cp -a index.html manifest.appcache ../conf/config.json $src_path

 # Set permissions to app files
-sudo chown -R root:root $final_path
+sudo chown -R root: $src_path

 # Modify Nginx configuration file and copy it to Nginx conf directory
 sed -i "s@YNH_WWW_PATH@$path@g" ../conf/nginx.conf
-sed -i "s@YNH_WWW_ALIAS@$final_path/@g" ../conf/nginx.conf
+sed -i "s@YNH_WWW_ALIAS@$src_path/@g" ../conf/nginx.conf
 sudo cp ../conf/nginx.conf /etc/nginx/conf.d/$domain.d/$app.conf

+# Migrate old apps
+if [ "$is_public" = "Yes" ]; then
+    ynh_app_setting_set "$app" is_public "true"
+elif [ "$is_public" = "No" ]; then
+    ynh_app_setting_set "$app" is_public "false"
+fi
+
 # If app is public, add url to SSOWat conf as skipped_uris
-if [ "$is_public" = "Yes" ];
-then
-    # See install script
-    sudo yunohost app setting $app unprotected_uris -v "/"
-    # Remove old settings
-    sudo yunohost app setting $app skipped_uris -d
+if [[ $is_public -eq 1 ]]; then
+    # unprotected_uris allows SSO credentials to be passed anyway.
+    ynh_app_setting_set "$app" unprotected_uris "/"
 fi

 # Restart services
 sudo service nginx reload
-sudo yunohost app ssowatconf
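
In the upgrade script, the migration block stores `"true"` or `"false"` through `ynh_app_setting_set`, but the shell variable `is_public` is not reassigned, so the `-eq 1` test that follows may still see the legacy string `Yes` or `No`. One way to keep the two steps consistent is to normalize the value once and reuse it. This is a sketch only: `normalize_is_public` is a hypothetical helper, and treating the canonical form as `1`/`0` is an assumption based on the `-eq 1` comparison.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: map legacy and boolean spellings onto 1/0.
normalize_is_public() {
    case "$1" in
        Yes|yes|true|1) echo 1 ;;
        No|no|false|0)  echo 0 ;;
        *) echo "unexpected is_public value: $1" >&2; return 1 ;;
    esac
}

# Simulate a setting written by the old install script.
legacy_value="Yes"
is_public=$(normalize_is_public "$legacy_value")

# The same numeric test the new scripts use now works on the normalized value.
if [ "$is_public" -eq 1 ]; then
    echo "app is public"
fi
```

In a real script the normalized value would then be written back with the setting helper and used for the public check.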

266

sources/app.js

@@ -1,266 +0,0 @@
-'use strict';
-
-/* jshint node:true */
-/* jshint browser:false */
-
-var app = require('app'),
-    path = require('path'),
-    fs = require('fs'),
-    BrowserWindow = require('browser-window'),
-    Menu = require('menu'),
-    Tray = require('tray'),
-    globalShortcut = require('electron').globalShortcut;
-
-var mainWindow = null,
-    appIcon = null,
-    openFile = process.argv.filter(function(arg) { return /\.kdbx$/i.test(arg); })[0],
-    ready = false,
-    restartPending = false,
-    htmlPath = path.join(__dirname, 'index.html'),
-    mainWindowPosition = {},
-    updateMainWindowPositionTimeout = null,
-    windowPositionFileName = path.join(app.getPath('userData'), 'window-position.json');
-
-process.argv.forEach(function(arg) {
-    if (arg.lastIndexOf('--htmlpath=', 0) === 0) {
-        htmlPath = path.resolve(arg.replace('--htmlpath=', ''), 'index.html');
-    }
-});
-
-app.on('window-all-closed', function() {
-    if (restartPending) {
-        // unbind all handlers, load new app.js module and pass control to it
-        globalShortcut.unregisterAll();
-        app.removeAllListeners('window-all-closed');
-        app.removeAllListeners('ready');
-        app.removeAllListeners('open-file');
-        app.removeAllListeners('activate');
-        var userDataAppFile = path.join(app.getPath('userData'), 'app.js');
-        delete require.cache[require.resolve('./app.js')];
-        require(userDataAppFile);
-        app.emit('ready');
-    } else {
-        if (process.platform !== 'darwin') {
-            app.quit();
-        }
-    }
-});
-app.on('ready', function() {
-    setAppOptions();
-    createMainWindow();
-    setGlobalShortcuts();
-});
-app.on('open-file', function(e, path) {
-    e.preventDefault();
-    openFile = path;
-    notifyOpenFile();
-});
-app.on('activate', function() {
-    if (process.platform === 'darwin') {
-        if (!mainWindow) {
-            createMainWindow();
-        }
-    }
-});
-app.on('will-quit', function() {
-    globalShortcut.unregisterAll();
-});
-app.restartApp = function() {
-    restartPending = true;
-    mainWindow.close();
-    setTimeout(function() { restartPending = false; }, 1000);
-};
-app.openWindow = function(opts) {
-    return new BrowserWindow(opts);
-};
-app.minimizeApp = function() {
-    if (process.platform !== 'darwin') {
-        mainWindow.minimize();
-        mainWindow.setSkipTaskbar(true);
-        appIcon = new Tray(path.join(__dirname, 'icon.png'));
-        appIcon.on('click', restoreMainWindow);
-        var contextMenu = Menu.buildFromTemplate([
-            { label: 'Open KeeWeb', click: restoreMainWindow },
-            { label: 'Quit KeeWeb', click: closeMainWindow }
-        ]);
-        appIcon.setContextMenu(contextMenu);
-        appIcon.setToolTip('KeeWeb');
-    }
-};
-app.getMainWindow = function() {
-    return mainWindow;
-};
-
-function setAppOptions() {
-    app.commandLine.appendSwitch('disable-background-timer-throttling');
-}
-
-function createMainWindow() {
-    mainWindow = new BrowserWindow({
-        show: false,
-        width: 1000, height: 700, 'min-width': 700, 'min-height': 400,
-        icon: path.join(__dirname, 'icon.png')
-    });
-    setMenu();
-    mainWindow.loadURL('file://' + htmlPath);
-    mainWindow.webContents.on('dom-ready', function() {
-        setTimeout(function() {
-            mainWindow.show();
-            ready = true;
-            notifyOpenFile();
-        }, 50);
-    });
-    mainWindow.on('resize', delaySaveMainWindowPosition);
-    mainWindow.on('move', delaySaveMainWindowPosition);
-    mainWindow.on('close', updateMainWindowPositionIfPending);
-    mainWindow.on('closed', function() {
-        mainWindow = null;
-        saveMainWindowPosition();
-    });
-    mainWindow.on('minimize', function() {
-        emitBackboneEvent('launcher-minimize');
-    });
-    restoreMainWindowPosition();
-}
-
-function restoreMainWindow() {
-    appIcon.destroy();
-    appIcon = null;
-    mainWindow.restore();
-    mainWindow.setSkipTaskbar(false);
-}
-
-function closeMainWindow() {
-    appIcon.destroy();
-    appIcon = null;
-    emitBackboneEvent('launcher-exit-request');
-}
-
-function delaySaveMainWindowPosition() {
-    if (updateMainWindowPositionTimeout) {
-        clearTimeout(updateMainWindowPositionTimeout);
-    }
-    updateMainWindowPositionTimeout = setTimeout(updateMainWindowPosition, 500);
-}
-
-function updateMainWindowPositionIfPending() {
-    if (updateMainWindowPositionTimeout) {
-        clearTimeout(updateMainWindowPositionTimeout);
-        updateMainWindowPosition();
-    }
-}
-
-function updateMainWindowPosition() {
-    if (!mainWindow) {
-        return;
-    }
-    updateMainWindowPositionTimeout = null;
-    var bounds = mainWindow.getBounds();
-    if (!mainWindow.isMaximized() && !mainWindow.isMinimized() && !mainWindow.isFullScreen()) {
-        mainWindowPosition.x = bounds.x;
-        mainWindowPosition.y = bounds.y;
-        mainWindowPosition.width = bounds.width;
-        mainWindowPosition.height = bounds.height;
-    }
-    mainWindowPosition.maximized = mainWindow.isMaximized();
-    mainWindowPosition.fullScreen = mainWindow.isFullScreen();
-    mainWindowPosition.displayBounds = require('screen').getDisplayMatching(bounds).bounds;
-    mainWindowPosition.changed = true;
-}
-
-function saveMainWindowPosition() {
-    if (!mainWindowPosition.changed) {
-        return;
-    }
-    delete mainWindowPosition.changed;
-    try {
-        fs.writeFileSync(windowPositionFileName, JSON.stringify(mainWindowPosition), 'utf8');
-    } catch (e) {}
-}
-
-function restoreMainWindowPosition() {
-    fs.readFile(windowPositionFileName, 'utf8', function(err, data) {
-        if (data) {
-            mainWindowPosition = JSON.parse(data);
-            if (mainWindow && mainWindowPosition) {
-                if (mainWindowPosition.width && mainWindowPosition.height) {
-                    var displayBounds = require('screen').getDisplayMatching(mainWindowPosition).bounds;
-                    var db = mainWindowPosition.displayBounds;
-                    if (displayBounds.x === db.x && displayBounds.y === db.y &&
-                        displayBounds.width === db.width && displayBounds.height === db.height) {
-                        mainWindow.setBounds(mainWindowPosition);
-                    }
-                }
-                if (mainWindowPosition.maximized) { mainWindow.maximize(); }
-                if (mainWindowPosition.fullScreen) { mainWindow.setFullScreen(true); }
-            }
-        }
-    });
-}
-
-function emitBackboneEvent(e) {
-    mainWindow.webContents.executeJavaScript('Backbone.trigger("' + e + '");');
-}
-
-function setMenu() {
-    if (process.platform === 'darwin') {
-        var name = require('app').getName();
-        var template = [
-            {
-                label: name,
-                submenu: [
-                    { label: 'About ' + name, role: 'about' },
-                    { type: 'separator' },
-                    { label: 'Services', role: 'services', submenu: [] },
-                    { type: 'separator' },
-                    { label: 'Hide ' + name, accelerator: 'Command+H', role: 'hide' },
-                    { label: 'Hide Others', accelerator: 'Command+Shift+H', role: 'hideothers' },
-                    { label: 'Show All', role: 'unhide' },
-                    { type: 'separator' },
-                    { label: 'Quit', accelerator: 'Command+Q', click: function() { app.quit(); } }
-                ]
-            },
-            {
-                label: 'Edit',
-                submenu: [
-                    { label: 'Undo', accelerator: 'CmdOrCtrl+Z', role: 'undo' },
-                    { label: 'Redo', accelerator: 'Shift+CmdOrCtrl+Z', role: 'redo' },
-                    { type: 'separator' },
-                    { label: 'Cut', accelerator: 'CmdOrCtrl+X', role: 'cut' },
-                    { label: 'Copy', accelerator: 'CmdOrCtrl+C', role: 'copy' },
-                    { label: 'Paste', accelerator: 'CmdOrCtrl+V', role: 'paste' },
-                    { label: 'Select All', accelerator: 'CmdOrCtrl+A', role: 'selectall' }
-                ]
-            }
-        ];
-        var menu = Menu.buildFromTemplate(template);
-        Menu.setApplicationMenu(menu);
-    }
-}
-
-function notifyOpenFile() {
-    if (ready && openFile && mainWindow) {
-        openFile = openFile.replace(/"/g, '\\"').replace(/\\/g, '\\\\');
-        mainWindow.webContents.executeJavaScript('if (window.launcherOpen) { window.launcherOpen("' + openFile + '"); } ' +
-            ' else { window.launcherOpenedFile="' + openFile + '"; }');
-        openFile = null;
-    }
-}
-
-function setGlobalShortcuts() {
-    var shortcutModifiers = process.platform === 'darwin' ? 'Ctrl+Alt+' : 'Shift+Alt+';
-    var shortcuts = {
-        C: 'copy-password',
-        B: 'copy-user',
-        U: 'copy-url'
-    };
-    Object.keys(shortcuts).forEach(function(key) {
-        var shortcut = shortcutModifiers + key;
-        var eventName = shortcuts[key];
-        try {
-            globalShortcut.register(shortcut, function () {
-                emitBackboneEvent(eventName);
-            });
-        } catch (e) {}
-    });
-}
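
In the removed `notifyOpenFile`, the file path is escaped with two chained replacements, quotes first and backslashes second. The order matters: escaping backslashes last also doubles the backslash that was just placed in front of each quote. The sketch below shows the backslash-first order, written in shell to match the package scripts rather than in the JavaScript of the removed file. `escape_for_js_string` is a hypothetical name and the sample path is made up.

```shell
#!/bin/sh
set -eu

# Hypothetical helper: escape a value for use inside a double-quoted
# JavaScript string literal. Backslashes are doubled first, then each
# double quote gets a single backslash in front of it.
escape_for_js_string() {
    printf '%s\n' "$1" | sed -e 's/\\/\\\\/g' -e 's/"/\\"/g'
}

# A made-up path containing both a quote and a backslash.
sample='my "vault"\db.kdbx'
escape_for_js_string "$sample"
```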

BIN

sources/icon.png

Binary file not shown. Size before: 7.4 KiB.

File diff suppressed because one or more lines are too long

@@ -1,43 +0,0 @@
// KeeWeb launcher script

// This script is distributed with the app and is its entry point
// It checks whether the app is available in userData folder and if its version is higher than local, launches it
// This script is the only part which will be updated only with the app itself, auto-update will not change it

// (C) Antelle 2015, MIT license https://github.com/antelle/keeweb

'use strict';

/* jshint node:true */
/* jshint browser:false */

var app = require('app'),
    path = require('path'),
    fs = require('fs');

var userDataDir = app.getPath('userData'),
    appPathUserData = path.join(userDataDir, 'app.js'),
    appPath = path.join(__dirname, 'app.js');

if (fs.existsSync(appPathUserData)) {
    var versionLocal = require('./package.json').version;
    try {
        var versionUserData = require(path.join(userDataDir, 'package.json')).version;
        versionLocal = versionLocal.split('.');
        versionUserData = versionUserData.split('.');
        for (var i = 0; i < versionLocal.length; i++) {
            if (+versionUserData[i] > +versionLocal[i]) {
                appPath = appPathUserData;
                break;
            }
            if (+versionUserData[i] < +versionLocal[i]) {
                break;
            }
        }
    }
    catch (e) {
        console.error('Error reading user file version', e);
    }
}

require(appPath);
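
The launcher's version check compares dot-separated version strings part by part as numbers, stopping at the first part that differs. A standalone sketch of the same idea follows; `isNewerVersion` is a hypothetical name, and treating missing parts as 0 is an assumption that the launcher itself does not make:

```javascript
// Hypothetical helper: returns true when version `a` is strictly newer than
// version `b`, comparing dot-separated numeric parts from left to right.
function isNewerVersion(a, b) {
    var partsA = a.split('.');
    var partsB = b.split('.');
    var length = Math.max(partsA.length, partsB.length);
    for (var i = 0; i < length; i++) {
        // Assumption: missing or non-numeric parts count as 0,
        // so '1.2' and '1.2.0' compare as equal.
        var numA = +partsA[i] || 0;
        var numB = +partsB[i] || 0;
        if (numA > numB) { return true; }
        if (numA < numB) { return false; }
    }
    return false; // all parts equal
}

console.log(isNewerVersion('1.10.0', '1.9.3')); // true: 10 > 9 numerically
console.log(isNewerVersion('1.2', '1.2.0'));    // false: equal
console.log(isNewerVersion('0.4.6', '0.5.0'));  // false: older
```

Converting each part with unary `+` is what makes `1.10.0` rank above `1.9.3`; a plain string comparison would order them the other way round.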

78

sources/node_modules/node-stream-zip/README.md

generated

vendored

@@ -1,78 +0,0 @@
# node-stream-zip [Build status](https://travis-ci.org/antelle/node-stream-zip)

Node.js library for reading and extracting ZIP archives.
Features:

- it never loads the entire archive into memory; everything is read in chunks
- support for large archives
- all operations are non-blocking, no sync i/o
- fast initialization
- no dependencies, no binary addons
- decompression with built-in zlib module
- deflate, deflate64, sfx, macosx/windows built-in archives
- ZIP64 support

# Installation

`$ npm install node-stream-zip`

# Usage

```javascript
var StreamZip = require('node-stream-zip');
var zip = new StreamZip({
    file: 'archive.zip',
    storeEntries: true
});
zip.on('error', function(err) { /*handle*/ });
zip.on('ready', function() {
    console.log('Entries read: ' + zip.entriesCount);
    // stream to stdout
    zip.stream('node/benchmark/net/tcp-raw-c2s.js', function(err, stm) {
        stm.pipe(process.stdout);
    });
    // extract file
    zip.extract('node/benchmark/net/tcp-raw-c2s.js', './temp/', function(err) {
        console.log('Entry extracted');
    });
    // extract folder
    zip.extract('node/benchmark/', './temp/', function(err, count) {
        console.log('Extracted ' + count + ' entries');
    });
    // extract all
    zip.extract(null, './temp/', function(err, count) {
        console.log('Extracted ' + count + ' entries');
    });
    // read file as buffer synchronously
    var data = zip.entryDataSync('README.md');
});
zip.on('extract', function(entry, file) {
    console.log('Extracted ' + entry.name + ' to ' + file);
});
zip.on('entry', function(entry) {
    // called on load, when entry description has been read
    // you can already stream this entry, without waiting until all entry descriptions are read (suitable for very large archives)
    console.log('Read entry ', entry.name);
});
```

If you pass `storeEntries: true` to the constructor, you will be able to access entries inside the ZIP archive with:

- `zip.entries()` - get descriptions of all entries
- `zip.entry(name)` - get entry description by name
- `zip.stream(entry, function(err, stm) { })` - get entry data reader stream
- `zip.entryDataSync(entry)` - get entry data synchronously

# Building

The project doesn't require building. To run unit tests with [nodeunit](https://github.com/caolan/nodeunit):
`$ npm test`

# Known issues

- [utf8](https://github.com/rubyzip/rubyzip/wiki/Files-with-non-ascii-filenames) file names
- AES encrypted files

# Contributors

ZIP parsing code has been partially forked from [cthackers/adm-zip](https://github.com/cthackers/adm-zip) (MIT license).

977

sources/node_modules/node-stream-zip/node_stream_zip.js

generated

vendored

@@ -1,977 +0,0 @@
/**
 * @license node-stream-zip | (c) 2015 Antelle | https://github.com/antelle/node-stream-zip/blob/master/MIT-LICENSE.txt
 * Portions copyright https://github.com/cthackers/adm-zip | https://raw.githubusercontent.com/cthackers/adm-zip/master/MIT-LICENSE.txt
 */

// region Deps

var
    util = require('util'),
    fs = require('fs'),
    path = require('path'),
    events = require('events'),
    zlib = require('zlib'),
    stream = require('stream');

// endregion

// region Constants

var consts = {
    /* The local file header */
    LOCHDR : 30, // LOC header size
    LOCSIG : 0x04034b50, // "PK\003\004"
    LOCVER : 4, // version needed to extract
    LOCFLG : 6, // general purpose bit flag
    LOCHOW : 8, // compression method
    LOCTIM : 10, // modification time (2 bytes time, 2 bytes date)
    LOCCRC : 14, // uncompressed file crc-32 value
    LOCSIZ : 18, // compressed size
    LOCLEN : 22, // uncompressed size
    LOCNAM : 26, // filename length
    LOCEXT : 28, // extra field length

    /* The Data descriptor */
    EXTSIG : 0x08074b50, // "PK\007\010"
    EXTHDR : 16, // EXT header size
    EXTCRC : 4, // uncompressed file crc-32 value
    EXTSIZ : 8, // compressed size
    EXTLEN : 12, // uncompressed size

    /* The central directory file header */
    CENHDR : 46, // CEN header size
    CENSIG : 0x02014b50, // "PK\001\002"
    CENVEM : 4, // version made by
    CENVER : 6, // version needed to extract
    CENFLG : 8, // encrypt, decrypt flags
    CENHOW : 10, // compression method
    CENTIM : 12, // modification time (2 bytes time, 2 bytes date)
    CENCRC : 16, // uncompressed file crc-32 value
    CENSIZ : 20, // compressed size
    CENLEN : 24, // uncompressed size
    CENNAM : 28, // filename length
    CENEXT : 30, // extra field length
    CENCOM : 32, // file comment length
    CENDSK : 34, // volume number start
    CENATT : 36, // internal file attributes
    CENATX : 38, // external file attributes (host system dependent)
    CENOFF : 42, // LOC header offset

    /* The entries in the end of central directory */
    ENDHDR : 22, // END header size
    ENDSIG : 0x06054b50, // "PK\005\006"
    ENDSIGFIRST : 0x50,
    ENDSUB : 8, // number of entries on this disk
    ENDTOT : 10, // total number of entries
    ENDSIZ : 12, // central directory size in bytes
    ENDOFF : 16, // offset of first CEN header
    ENDCOM : 20, // zip file comment length
    MAXFILECOMMENT : 0xFFFF,

    /* The entries in the end of ZIP64 central directory locator */
    ENDL64HDR : 20, // ZIP64 end of central directory locator header size
    ENDL64SIG : 0x07064b50, // ZIP64 end of central directory locator signature
    ENDL64SIGFIRST : 0x50,
    ENDL64OFS : 8, // ZIP64 end of central directory offset

    /* The entries in the end of ZIP64 central directory */
    END64HDR : 56, // ZIP64 end of central directory header size
    END64SIG : 0x06064b50, // ZIP64 end of central directory signature
    END64SIGFIRST : 0x50,
    END64SUB : 24, // number of entries on this disk
    END64TOT : 32, // total number of entries
    END64SIZ : 40,
    END64OFF : 48,

    /* Compression methods */
    STORED : 0, // no compression
    SHRUNK : 1, // shrunk
    REDUCED1 : 2, // reduced with compression factor 1
    REDUCED2 : 3, // reduced with compression factor 2
    REDUCED3 : 4, // reduced with compression factor 3
    REDUCED4 : 5, // reduced with compression factor 4
    IMPLODED : 6, // imploded
    // 7 reserved
    DEFLATED : 8, // deflated
    ENHANCED_DEFLATED: 9, // enhanced deflated
    PKWARE : 10,// PKWare DCL imploded
    // 11 reserved
    BZIP2 : 12, // compressed using BZIP2
    // 13 reserved
    LZMA : 14, // LZMA
    // 15-17 reserved
    IBM_TERSE : 18, // compressed using IBM TERSE
    IBM_LZ77 : 19, //IBM LZ77 z

    /* General purpose bit flag */
    FLG_ENC : 0, // encrypted file
    FLG_COMP1 : 1, // compression option
    FLG_COMP2 : 2, // compression option
    FLG_DESC : 4, // data descriptor
    FLG_ENH : 8, // enhanced deflation
    FLG_STR : 16, // strong encryption
    FLG_LNG : 1024, // language encoding
    FLG_MSK : 4096, // mask header values
    FLG_ENTRY_ENC : 1,

    /* 4.5 Extensible data fields */
    EF_ID : 0,
    EF_SIZE : 2,

    /* Header IDs */
    ID_ZIP64 : 0x0001,
    ID_AVINFO : 0x0007,
    ID_PFS : 0x0008,
    ID_OS2 : 0x0009,
    ID_NTFS : 0x000a,
    ID_OPENVMS : 0x000c,
    ID_UNIX : 0x000d,
    ID_FORK : 0x000e,
    ID_PATCH : 0x000f,
    ID_X509_PKCS7 : 0x0014,
    ID_X509_CERTID_F : 0x0015,
    ID_X509_CERTID_C : 0x0016,
    ID_STRONGENC : 0x0017,
    ID_RECORD_MGT : 0x0018,
    ID_X509_PKCS7_RL : 0x0019,
    ID_IBM1 : 0x0065,
    ID_IBM2 : 0x0066,
    ID_POSZIP : 0x4690,

    EF_ZIP64_OR_32 : 0xffffffff,
    EF_ZIP64_OR_16 : 0xffff
};

// endregion
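
The `END*` constants above are byte offsets into the fixed 22-byte end-of-central-directory (EOCD) record. As a rough illustration of how such offsets are typically applied with Node's `Buffer`, here is a sketch with a hypothetical `parseEndOfCentralDirectory` helper; the result field names are borrowed from properties used elsewhere in this file, and the record is built by hand for the demo rather than read from an archive:

```javascript
// Offsets copied from the constants above.
var ENDHDR = 22, ENDSIG = 0x06054b50,
    ENDSUB = 8, ENDTOT = 10, ENDSIZ = 12, ENDOFF = 16, ENDCOM = 20;

// Hypothetical helper: decode an EOCD record that starts at `pos` in `buffer`.
// All multi-byte ZIP fields are little-endian.
function parseEndOfCentralDirectory(buffer, pos) {
    if (buffer.length - pos < ENDHDR || buffer.readUInt32LE(pos) !== ENDSIG) {
        throw new Error('Invalid END header (bad signature)');
    }
    return {
        volumeEntries: buffer.readUInt16LE(pos + ENDSUB),
        totalEntries: buffer.readUInt16LE(pos + ENDTOT),
        size: buffer.readUInt32LE(pos + ENDSIZ),
        offset: buffer.readUInt32LE(pos + ENDOFF),
        commentLength: buffer.readUInt16LE(pos + ENDCOM)
    };
}

// Hand-built record describing 3 entries in a 150-byte central directory
// that starts at byte 1024.
var record = Buffer.alloc(ENDHDR);
record.writeUInt32LE(ENDSIG, 0);
record.writeUInt16LE(3, ENDSUB);
record.writeUInt16LE(3, ENDTOT);
record.writeUInt32LE(150, ENDSIZ);
record.writeUInt32LE(1024, ENDOFF);
console.log(parseEndOfCentralDirectory(record, 0));
```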

// region StreamZip

var StreamZip = function(config) {
    var
        fd,
        fileSize,
        chunkSize,
        ready = false,
        that = this,
        op,
        centralDirectory,

        entries = config.storeEntries !== false ? {} : null,
        fileName = config.file;

    open();

    function open() {
        fs.open(fileName, 'r', function(err, f) {
            if (err)
                return that.emit('error', err);
            fd = f;
            fs.fstat(fd, function(err, stat) {
                if (err)
                    return that.emit('error', err);
                fileSize = stat.size;
                chunkSize = config.chunkSize || Math.round(fileSize / 1000);
                chunkSize = Math.max(Math.min(chunkSize, Math.min(128*1024, fileSize)), Math.min(1024, fileSize));
                readCentralDirectory();
            });
        });
    }
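
The chunk size computed in `open()` defaults to roughly one thousandth of the file size and is then clamped between 1 KiB and 128 KiB, with both bounds capped at the file size. The same arithmetic as a standalone sketch; `pickChunkSize` is a hypothetical name used only for illustration:

```javascript
// Hypothetical standalone version of the chunk size calculation.
function pickChunkSize(fileSize, requested) {
    var upper = Math.min(128 * 1024, fileSize); // at most 128 KiB, or the whole file
    var lower = Math.min(1024, fileSize);       // at least 1 KiB, unless the file is smaller
    var size = requested || Math.round(fileSize / 1000);
    return Math.max(Math.min(size, upper), lower);
}

console.log(pickChunkSize(500));                  // 500: tiny file, read it in one chunk
console.log(pickChunkSize(10 * 1024 * 1024));     // 10486: about 1/1000 of 10 MiB
console.log(pickChunkSize(1024 * 1024 * 1024));   // 131072: capped at 128 KiB
console.log(pickChunkSize(10 * 1024 * 1024, 64)); // 1024: a small request is raised to 1 KiB
```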

    function readUntilFoundCallback(err, bytesRead) {
        if (err || !bytesRead)
            return that.emit('error', err || 'Archive read error');
        var
            buffer = op.win.buffer,
            pos = op.lastPos,
            bufferPosition = pos - op.win.position,
            minPos = op.minPos;
        while (--pos >= minPos && --bufferPosition >= 0) {
            if (buffer[bufferPosition] === op.firstByte) { // quick check first signature byte
                if (buffer.readUInt32LE(bufferPosition) === op.sig) {
                    op.lastBufferPosition = bufferPosition;
                    op.lastBytesRead = bytesRead;
                    op.complete();
                    return;
                }
            }
        }
        if (pos === minPos) {
            return that.emit('error', 'Bad archive');
        }
        op.lastPos = pos + 1;
        op.chunkSize *= 2;
        if (pos <= minPos)
            return that.emit('error', 'Bad archive');
        var expandLength = Math.min(op.chunkSize, pos - minPos);
        op.win.expandLeft(expandLength, readUntilFoundCallback);

    }

    function readCentralDirectory() {
        var totalReadLength = Math.min(consts.ENDHDR + consts.MAXFILECOMMENT, fileSize);
        op = {
            win: new FileWindowBuffer(fd),
            totalReadLength: totalReadLength,
            minPos: fileSize - totalReadLength,
            lastPos: fileSize,
            chunkSize: Math.min(1024, chunkSize),
            firstByte: consts.ENDSIGFIRST,
            sig: consts.ENDSIG,
            complete: readCentralDirectoryComplete
        };
        op.win.read(fileSize - op.chunkSize, op.chunkSize, readUntilFoundCallback);
    }
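
The search above walks backwards from the end of the file looking for the end-of-central-directory signature, checking a single byte before doing the 4-byte comparison. A simplified in-memory sketch of that scan, without the incremental window expansion; `findLastSignature` is a hypothetical name and the sample buffer is made up for the demo:

```javascript
var ENDSIG = 0x06054b50; // bytes 50 4b 05 06 on disk (little-endian)
var ENDSIGFIRST = 0x50;  // first byte of the signature, 'P'

// Hypothetical helper: returns the index of the last EOCD signature in
// `buffer`, or -1 when there is none.
function findLastSignature(buffer) {
    for (var i = buffer.length - 4; i >= 0; i--) {
        // Cheap single-byte check before the 4-byte read.
        if (buffer[i] === ENDSIGFIRST && buffer.readUInt32LE(i) === ENDSIG) {
            return i;
        }
    }
    return -1;
}

var tail = Buffer.concat([
    Buffer.from('file data'),              // 9 bytes standing in for archive contents
    Buffer.from([0x50, 0x4b, 0x05, 0x06]), // EOCD signature
    Buffer.alloc(18)                       // rest of an empty 22-byte EOCD record
]);
console.log(findLastSignature(tail));                             // 9
console.log(findLastSignature(Buffer.from('no signature here'))); // -1
```

Scanning from the end is the natural direction because the record sits at the end of the archive, followed only by an optional comment of at most 65535 bytes.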

    function readCentralDirectoryComplete() {
        var buffer = op.win.buffer;
        var pos = op.lastBufferPosition;
        try {
            centralDirectory = new CentralDirectoryHeader();
            centralDirectory.read(buffer.slice(pos, pos + consts.ENDHDR));
            centralDirectory.headerOffset = op.win.position + pos;
            if (centralDirectory.commentLength)
                that.comment = buffer.slice(pos + consts.ENDHDR,
                    pos + consts.ENDHDR + centralDirectory.commentLength).toString();
            else
                that.comment = null;
            that.entriesCount = centralDirectory.volumeEntries;
            that.centralDirectory = centralDirectory;
            if (centralDirectory.volumeEntries === consts.EF_ZIP64_OR_16 && centralDirectory.totalEntries === consts.EF_ZIP64_OR_16
                || centralDirectory.size === consts.EF_ZIP64_OR_32 || centralDirectory.offset === consts.EF_ZIP64_OR_32) {
                readZip64CentralDirectoryLocator();
            } else {
                op = {};
                readEntries();
            }
        } catch (err) {
            that.emit('error', err);
        }
    }
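
The condition at the end of `readCentralDirectoryComplete` treats all-ones values in the classic record as a marker that the real values live in the ZIP64 structures. Written out as a standalone predicate, purely as a sketch; `needsZip64` is a hypothetical name:

```javascript
var EF_ZIP64_OR_16 = 0xffff;     // sentinel for 16-bit fields
var EF_ZIP64_OR_32 = 0xffffffff; // sentinel for 32-bit fields

// Hypothetical helper mirroring the check above: true when the classic
// end-of-central-directory values are placeholders for ZIP64 values.
function needsZip64(cd) {
    return (cd.volumeEntries === EF_ZIP64_OR_16 && cd.totalEntries === EF_ZIP64_OR_16) ||
        cd.size === EF_ZIP64_OR_32 ||
        cd.offset === EF_ZIP64_OR_32;
}

console.log(needsZip64({ volumeEntries: 3, totalEntries: 3, size: 150, offset: 1024 }));           // false
console.log(needsZip64({ volumeEntries: 0xffff, totalEntries: 0xffff, size: 150, offset: 1024 })); // true
console.log(needsZip64({ volumeEntries: 3, totalEntries: 3, size: 150, offset: 0xffffffff }));     // true
```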

    function readZip64CentralDirectoryLocator() {
        var length = consts.ENDL64HDR;
        if (op.lastBufferPosition > length) {
            op.lastBufferPosition -= length;
            readZip64CentralDirectoryLocatorComplete();
        } else {
            op = {
                win: op.win,
                totalReadLength: length,
                minPos: op.win.position - length,
                lastPos: op.win.position,
                chunkSize: op.chunkSize,
                firstByte: consts.ENDL64SIGFIRST,
                sig: consts.ENDL64SIG,
                complete: readZip64CentralDirectoryLocatorComplete
            };
            op.win.read(op.lastPos - op.chunkSize, op.chunkSize, readUntilFoundCallback);
        }
    }

    function readZip64CentralDirectoryLocatorComplete() {
        var buffer = op.win.buffer;
        var locHeader = new CentralDirectoryLoc64Header();
        locHeader.read(buffer.slice(op.lastBufferPosition, op.lastBufferPosition + consts.ENDL64HDR));
        var readLength = fileSize - locHeader.headerOffset;
        op = {
            win: op.win,
            totalReadLength: readLength,
            minPos: locHeader.headerOffset,
            lastPos: op.lastPos,
            chunkSize: op.chunkSize,
            firstByte: consts.END64SIGFIRST,
            sig: consts.END64SIG,
            complete: readZip64CentralDirectoryComplete
        };
        op.win.read(fileSize - op.chunkSize, op.chunkSize, readUntilFoundCallback);
    }

    function readZip64CentralDirectoryComplete() {
        var buffer = op.win.buffer;
        var zip64cd = new CentralDirectoryZip64Header();
        zip64cd.read(buffer.slice(op.lastBufferPosition, op.lastBufferPosition + consts.END64HDR));
        that.centralDirectory.volumeEntries = zip64cd.volumeEntries;
        that.centralDirectory.totalEntries = zip64cd.totalEntries;
        that.centralDirectory.size = zip64cd.size;
        that.centralDirectory.offset = zip64cd.offset;
        that.entriesCount = zip64cd.volumeEntries;
        op = {};
        readEntries();
    }

    function readEntries() {
        op = {
            win: new FileWindowBuffer(fd),
            pos: centralDirectory.offset,
            chunkSize: chunkSize,
            entriesLeft: centralDirectory.volumeEntries
        };
        op.win.read(op.pos, Math.min(chunkSize, fileSize - op.pos), readEntriesCallback);
    }

    function readEntriesCallback(err, bytesRead) {
        if (err || !bytesRead)
            return that.emit('error', err || 'Entries read error');
        var
            buffer = op.win.buffer,
            bufferPos = op.pos - op.win.position,
            bufferLength = buffer.length,
            entry = op.entry;
        try {
            while (op.entriesLeft > 0) {
                if (!entry) {
                    entry = new ZipEntry();
                    entry.readHeader(buffer, bufferPos);
                    entry.headerOffset = op.win.position + bufferPos;
                    op.entry = entry;
                    op.pos += consts.CENHDR;
                    bufferPos += consts.CENHDR;
                }
                var entryHeaderSize = entry.fnameLen + entry.extraLen + entry.comLen;
                var advanceBytes = entryHeaderSize + (op.entriesLeft > 1 ? consts.CENHDR : 0);
                if (bufferLength - bufferPos < advanceBytes) {
                    op.win.moveRight(chunkSize, readEntriesCallback, bufferPos);
                    op.move = true;
                    return;
                }
                entry.read(buffer, bufferPos);
                if (entries)
                    entries[entry.name] = entry;
                that.emit('entry', entry);
                op.entry = entry = null;
                op.entriesLeft--;
                op.pos += entryHeaderSize;
                bufferPos += entryHeaderSize;
            }
            that.emit('ready');
        } catch (err) {
            that.emit('error', err);
        }
    }
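
Each central directory record consists of a fixed 46-byte header followed by a variable part (file name, extra field, comment), which is why the loop above advances by `CENHDR` and then by the three lengths. A simplified sketch of that walk over a buffer that already holds the whole directory; `listEntryNames` and `makeRecord` are hypothetical helpers, and the records are synthetic:

```javascript
// Offsets copied from the constants earlier in this file.
var CENHDR = 46, CENSIG = 0x02014b50, CENNAM = 28, CENEXT = 30, CENCOM = 32;

// Hypothetical helper: list the file names stored in a buffer holding
// `count` consecutive central directory records.
function listEntryNames(buffer, count) {
    var names = [];
    var pos = 0;
    for (var i = 0; i < count; i++) {
        if (buffer.readUInt32LE(pos) !== CENSIG) {
            throw new Error('Invalid CEN header (bad signature)');
        }
        var fnameLen = buffer.readUInt16LE(pos + CENNAM);
        var extraLen = buffer.readUInt16LE(pos + CENEXT);
        var comLen = buffer.readUInt16LE(pos + CENCOM);
        pos += CENHDR; // skip the fixed-size part
        names.push(buffer.toString('utf8', pos, pos + fnameLen));
        pos += fnameLen + extraLen + comLen; // skip the variable-size part
    }
    return names;
}

// Build one synthetic record with the given name and no extra field or comment.
function makeRecord(name) {
    var header = Buffer.alloc(CENHDR);
    header.writeUInt32LE(CENSIG, 0);
    header.writeUInt16LE(Buffer.byteLength(name), CENNAM);
    return Buffer.concat([header, Buffer.from(name)]);
}

var directory = Buffer.concat([makeRecord('a.txt'), makeRecord('docs/b.md')]);
console.log(listEntryNames(directory, 2));
```

Unlike the original, this sketch assumes the entire directory fits in one buffer, so it never has to pause and fetch the next chunk in the middle of a record.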

    function checkEntriesExist() {
        if (!entries)
            throw 'storeEntries disabled';
    }

    Object.defineProperty(this, 'ready', { get: function() { return ready; } });

    this.entry = function(name) {
        checkEntriesExist();
        return entries[name];
    };

    this.entries = function() {
        checkEntriesExist();
        return entries;
    };

    this.stream = function(entry, callback) {
        return openEntry(entry, function(err, entry) {
            if (err)
                return callback(err);
            var offset = dataOffset(entry);
            var entryStream = new EntryDataReaderStream(fd, offset, entry.compressedSize);
            if (entry.method === consts.STORED) {
            } else if (entry.method === consts.DEFLATED || entry.method === consts.ENHANCED_DEFLATED) {
                entryStream = entryStream.pipe(zlib.createInflateRaw());
            } else {